Dijkstra's Algorithm

Quick Recap on Dijkstra's:

- no negative-weight edges

- weighted version of breadth-first-search

- instead of FIFO queue, use priority queue

- keys are shortest-path weights

- two sets of vertices

- vertices whose final shortest-path weights are already found

- priority queue = vertices still in the queue, those we still need to evaluate their shortest path

- greedy-choice: at each step we choose the light edge

Code#

def init_single_source(vertices, source_node):

for v in vertices:

d[v] = inf

p[v] = nil

d[s] = 0

def relax(u, v, w):

if d[v] > d[u] + w(u, v):

d[v] = d[u] + w(u, v)

p[v] = u

def dijkstra(V, E, w, s):

init_single_source(V, s):

S = set() # init empty

Q = priority_queue(V)

while Q is not empty:

u = extract_min(Q)

S = S.add(u)

for v in adj_list[u]:

relax(u, v, w)Mathematical Context For Path Properties#

First, let's cover the working parts & properties:

Triangle Inequality#

The Triangle Inequality states that for all , we have .

Proof#

- we have a path , as well as a shortest path

- the weight of the shortest path is any path

- let us say that the path

- this means if we use the shortest path and direct edge , then the weight along

Upper Bound Property#

The Upper Bound Property states that we always have . Once , it never changes.

Proof by Contradiction on Inequality#

- let's assume this starts initially true

- then, assume

- this is the first instance of it happening

We know that this can't have happened at initialization, because init_single_source() sets all , therefore this must have happened at some point during the algorithm's run time.

Let be the vertex that causes to change, since in order for us to have altered , relax(u, v, w) must have been called.

Within relax(u, v, w), is altered only if:

d[v] > d[u] + w(u,v)evaluates to true.- if so,

d[v] = d[u] + w(u, v)is the change made to

Recall our initial assumption, we have:

- via Triangle Inequality,

- , since was the first vertex where its estimate was less than the shortest path, meaning:

- this results in the full inequality:

However, this is impossible, since relax(u, v, w) set , and nothing can be equal and be explicitly less than something else simultaneously.

Thus, we have proved .

No-Path Property#

The No-Path Property states that if , then will always equal

Proof#

- via the Upper Bound Property,

- this means

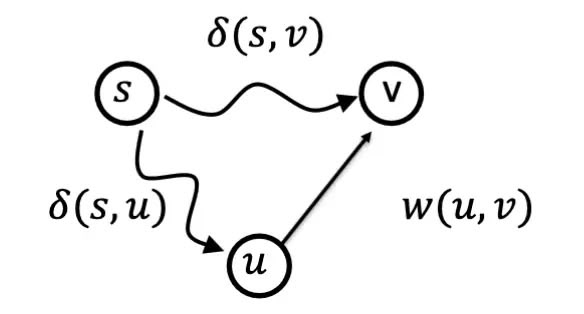

Convergence Property#

The Convergence Property states that if:

- we have a path – (it is a shortest path)

- we call

relax(u, v, w),

then afterward.

Proof#

We relax within this code:

if d[v] > d[u] + w(u, v):

d[v] = d[u] + w(u, v)

p[v] = uAfter this code, , because when entering relax(u, v, w):

- if was – we would bypass the if-condition, and nothing happens

- if was , then it is set

The only two cases resulting in .

We can take the RHS and simplify it, as we have defined :

Since we defined to be a shortest path, meaning:

Finally, by the Upper Bound Property, we know that . This means we must have .

Path Relaxation Property#

Let be a shortest path from to . Relaxing these edges, in order, will ensure that . (The shortest path estimate at is the correct one).

Proof by Induction#

We will show via induction on the number of vertices that after the edge is relaxed.

Base Case: , and .

At initialization in init_single_source(), we set .

Inductive Step: Assume .

As we relax edge , note that we have met the pre-conditions for the Convergence Property:

- we have a shortest path

- by optimal substructure, the path must also be a shortest path

- we have

- we are now calling

relaxon

hence, converges to be and never changes.

We have proved by induction that if we relax the edges in order.

Dijkstra's Proof#

via Loop Invariant#

We will prove via a Loop Invariant that Dijkstra's Algorithm is correct.

def dijkstra(V, E, w, s):

init_single_source(V, s):

S = set() # init empty

Q = priority_queue(V)

while Q is not empty:

u = extract_min(Q)

S.add(u)

for v in adj_list[u]:

relax(u, v, w)Loop Invariant: At the end of each iteration of the while loop,

Initialization#

At initialization, is an empty set, and so the loop invariant holds as a by-product of having no yet.

Maintenance#

Show that when is added to in each iteration.

We will prove the maintenance property through contradiction:

Assume that for the first time, after an iteration on some vertex , we have added to , and .

What do we know?

For starters, we know that , as . This means that and when is added.

We also know, by the No-Path Property, there exists some path . Otherwise, the property states that:

which contradicts our assumption that .

Since there exists a path , there must exist a shortest path, , from .

Allow us to decompose into , such that , , and edge is the edge crossing the two sets .

Claim: when is added to

Proof:

- by optimal substructure, any subpath within , such as , is a shortest path as well

- we called

relaxon edge at the time of adding to- so by the Convergence Property,

This means that if , we have already reached a contradiction, as our initial assumption was:

and we have just proved that the estimate is the correct delta, and the proof is finished.

However, what if ? Can we still reach a contradiction?

Once again, we know that there exists a shortest path from via the No-Path Property, and that any subpath along is also a shortest path by optimal substructure. This implies a chain of logic:

- is a shortest path

- by our Claim,

- since there are no non-negative edge weights, a shortest path must be at least as long as , meaning:

-

- this is because we must pass to get to

- by the Upper Bound Property

Putting this all together:

Lastly, we know that:

- we are in the iteration of the while loop where we choose

- stores a vertex as a key-value pair

{ v : d[v] } extract_min(Q)chooses to extract the vertexvifd[v]is minimum across all estimates it finds inQ- both and were in when we chose

This means in order for to have been chosen, . We can conclude:

The estimate must be equal to the estimate if it is both and . This, again, contradicts our initial assumption that as , and by our initial Claim.

Termination#

At the end of the while loop, (which was equal to ) is now the . At each iteration, we added the current vertex to , meaning that now, . This implies that:

The loop variant has been shown to hold across initialization, maintenance, and termination, thus proving Dijkstra's Algorithm.